Workshop on Deep reinforcement learning for infrastructure maintenance planning: Trends, opportunities and challenges

Organized by Daniel Straub and Kostas Papakonstantinou at the TUM Georg Nemetschek Institute - Artificial Intelligence for the Built World

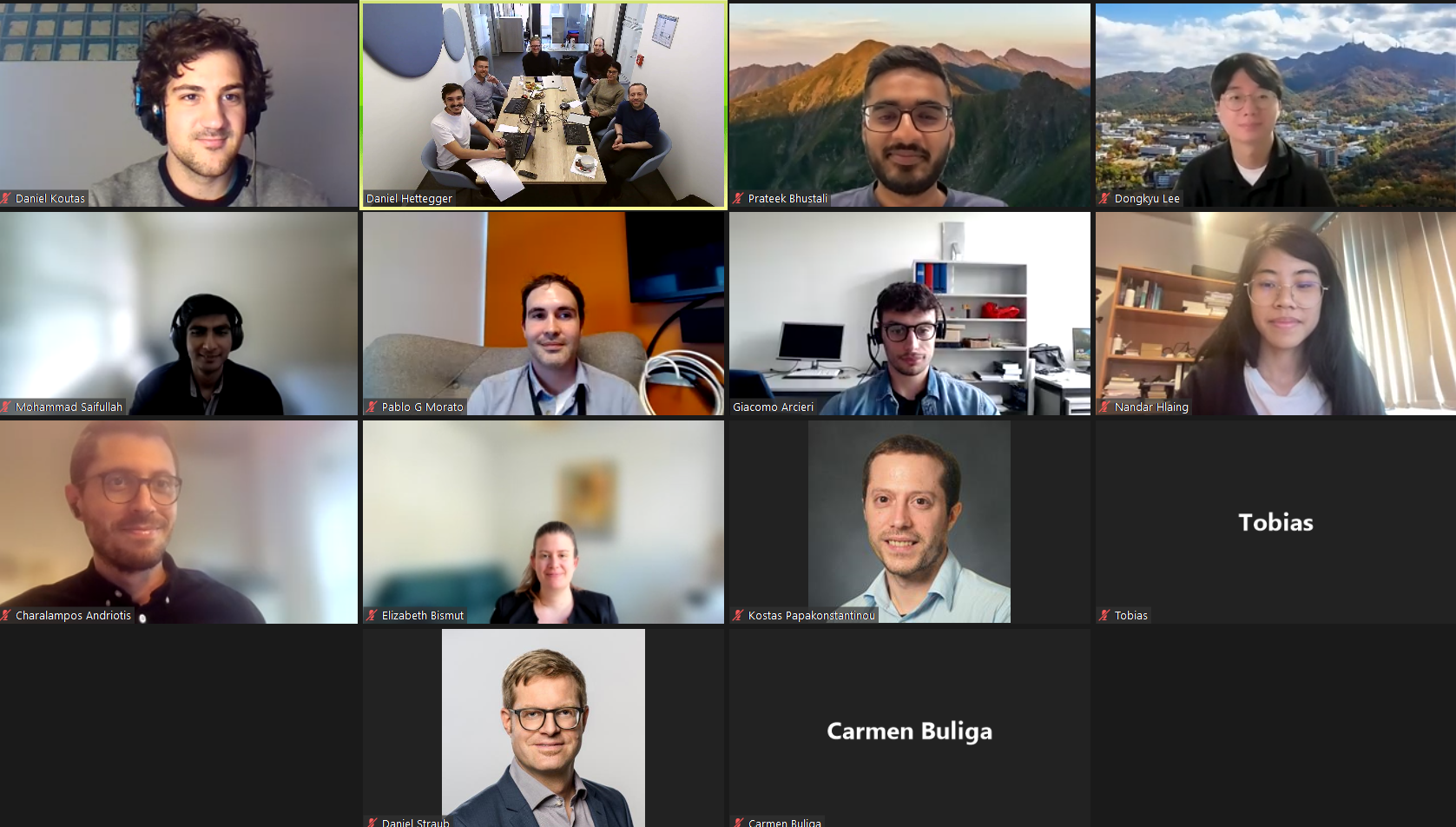

Deep reinforcement learning (DRL) has shown great potential for enabling efficient planning of the operation and maintenance of engineering systems. Recent work suggests that the approach may be applicable to systems of considerable size and complexity. This workshop brought together 23 experts and researchers to review the current state of the art and to discuss the main research questions and the steps needed towards real-life application of DRL for infrastructure management.

The first part of the workshop began with talks by senior experts, who provided an overview of the current state of the art in research and of the industry perspective. This led to a joint discussion on the current capabilities and limitations of the methods and on industry requirements. The second part of the workshop began with presentations by PhD students, which led into a discussion on the most relevant and pressing research questions. The student presentations covered different decentralized DRL approaches, robust solutions and inference alternatives, parallelized DRL training, and large-system implementation under several constraints.

The participants discussed recent advances in DRL for infrastructure management and identified key challenges and promising future directions. These included scaling up solutions to systems with thousands of components; the role of model uncertainty in DRL-based decisions and real-life implementation; different inference and computational techniques for propagating uncertainty and past history in large systems; the implications of policy interpretability; operational safety needs; and safe RL. We also touched upon human intervention, feedback, and collaboration in the training and implementation phases, together with the practical realities of regulations and data quality issues. Additional topics were the promise and challenges of transfer learning, and algorithmic implementation issues, including the role of hyperparameters. Finally, the participants agreed to work jointly towards common benchmarks that enable testing and comparing different algorithmic solutions.

All participants emphasized the usefulness of this exchange, and it was agreed to hold similar meetings periodically to exchange ideas and experiences and to report progress. Anyone who wishes to be informed about future meetings can contact daniel.hettegger@tum.de.

Agenda

| Time | Session |
|---|---|
| 10:00 | Welcome |
| 10:05 - 10:30 | Presentation by Kostas Papakonstantinou: Multi-agent deep reinforcement learning for infrastructure management: Recent advances and future challenges |
| 10:35 - 11:00 | Presentation by Olga Fink: Safe multi-agent deep reinforcement learning for joint bidding and maintenance scheduling of generation units |
| 11:05 - 11:30 | Presentation by Tobias Zeh: AI-based asset management strategy - A practical approach |
| 11:30 - 12:00 | Discussion on opportunities and challenges |
| 12:00 - 14:00 | Lunch break |
| 14:00 - 16:00 | PhD presentations including discussions: |
| | Prateek Bhustali: Multiagent decision-making for scalable inspection & maintenance planning of deteriorating systems |
| | Giacomo Arcieri: POMDP inference and robust solution via deep reinforcement learning: An application to railway optimal maintenance |
| | Daniel Hettegger: Investigation of Inspection and Maintenance Optimization with Deep Reinforcement Learning in Absence of Belief States |
| | Dongkyu Lee: Operation and Maintenance Planning for Lifeline Networks Using Parallelized Multi-agent Deep Q-Network |
| | Mohammad Saifullah: Multi-Agent Deep Reinforcement Learning (MARL) for optimal decision-making for transportation systems and Value of Information Implications for MARL |
| 16:00 - 16:15 | Poll on the most interesting / relevant research question |
| 16:15 - 16:45 | Discussion: "What are the key research questions?" |
| 16:45 - 17:00 | Closure |

Group Photo